Synology NAS Logging Woes: When the Logs Hit the Fan

Ever had your Synology NAS throw a tantrum over logs? Dive into how a hidden 200MB disk quota broke everything, until a little detective work with df -h, du, and some log trimming saved the day. Check out the full story on how I fixed the mess!

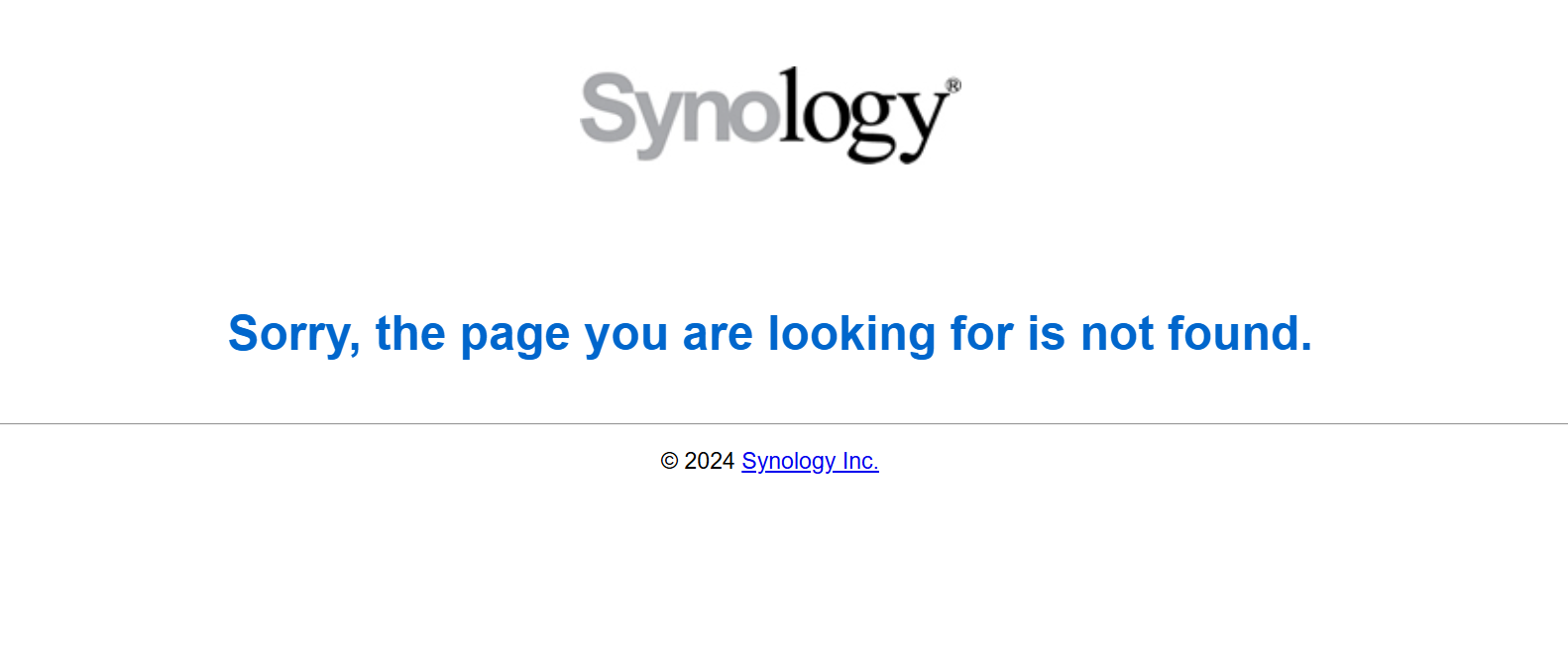

So, you’ve just bought a shiny Synology NAS, excited to get your hands dirty with some advanced data management, and then—bam!—you’re hit with the dreaded "Sorry, the page you are looking for is not found" error. But wait—this isn’t just any run-of-the-mill error. This is Synology Photos refusing to cooperate, and it’s just the beginning of a truly frustrating week. 😤

Let me take you down this rabbit hole of logging madness, a journey that’s been way too surreal for comfort. Let’s see how Synology’s logging system decided to go haywire, how the logs vanished into oblivion, and how I went full detective mode to fix it (spoiler alert: not without drama).

🕵️‍♂️ The Initial "WTF" Moment

It started innocently enough. Synology Photos stopped working, but I didn't realize until I tried to open the web UI and was greeted with the “Sorry, the page you are looking for is not found” message. A simple Google search didn’t show much, so I dove into the logs, hoping for some insight. What did I find? Nothing. Absolutely nothing. The Synology Application Service was in need of a repair, but every time I tried the repair option, it refused to cooperate.

"Okay," I thought, "Maybe I need to reinstall some dependencies, like Node.js, and try again." But guess what? Even that failed. This is when I decided to SSH into my Synology NAS to see what was really going on. Spoiler: it wasn’t pretty. 😒

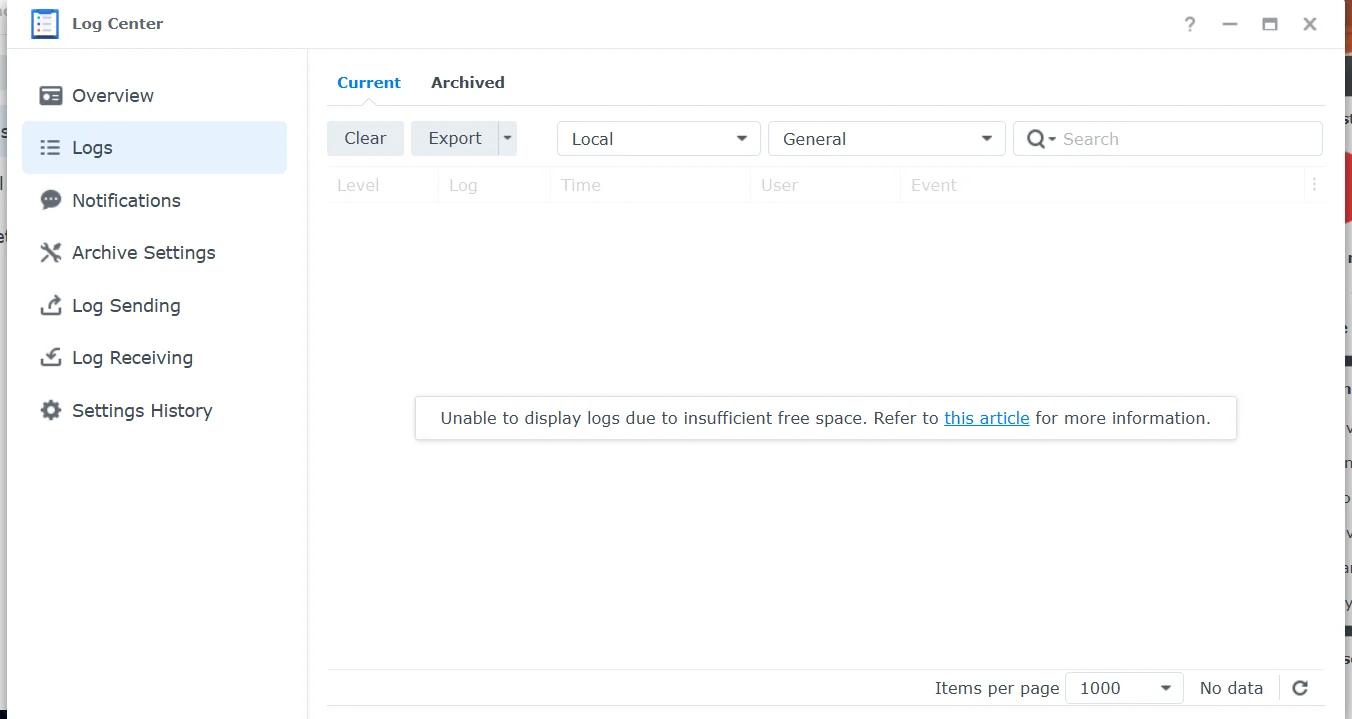

💥 The Log Center Meltdown

Things quickly took a darker turn when I noticed that Synology MailPlus Server logging was also down. Not a single log was showing up in the app, nor were any logs being forwarded to my syslog-ng server. My first instinct was to check Log Center, but it didn’t get any better. The error message it threw at me was “Unable to display logs due to insufficient free space.”

I almost laughed. I’ve got nearly 20TB of free space on the disk. So, what gives? 🤷‍♂️

📊 A Deep Dive into Disk Usage

At this point, I wasn’t laughing anymore. Time to dig deeper. First, I ran a quick df command to see where the issue might be hiding.

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/md0 8191352 1632072 6440496 21% /

Okay, so we’re sitting at 21% used, and nothing seems out of the ordinary here. Hmm... but where the heck are the logs being stored? That’s when I discovered the truth: /var/log/synolog/ holds the critical log files as SQLite databases. Not only that, but it was a mess.

⚠️ The Database Disaster

I thought I could simply run an integrity check, like any reasonable Linux administrator would. I tried to open the .SYNOACCOUNTDB SQLite database.

sqlite3 /var/log/synolog/.SYNOACCOUNTDB "PRAGMA integrity_check;"

What did I get? Disk I/O error (10). I was starting to feel like I was in an episode of The Twilight Zone. I tried a backup, but even that failed.

sqlite3 /var/log/synolog/.SYNOACCOUNTDB ".backup /volume1/Backup/backup.db"

Error: disk I/O error

So here I was, facing a disk I/O error with no explanation, on a system that was clearly not out of space. I went deeper, hoping for a silver lining. And that’s when I hit what looked like the bombshell: the log databases seemed completely corrupted. The database was toast, and there was no way to recover it... or was there?

🎉 The Sweet Taste of Victory (With a Dash of Disk Cleanup)

After hours of digging through logs and feeling like I was battling an invisible enemy, the truth finally came to light. Turns out, the SQLite databases weren’t actually corrupted—nope, it was the disk quota enforcement that caused the real havoc. The system’s hardcoded 200MB limit on the /var/log directory had kicked in, and that’s what had caused the entire logging system to fail.

Here’s how the mystery unfolded. I first ran df to check the overall disk usage. It showed that /dev/md0, the root filesystem, had plenty of free space:

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/md0 8191352 1632072 6440496 21% /

At this point, everything looked good. Plenty of space available. But when I specifically checked the space on /var/log, things started to get suspicious:

df -h /var/log

To my surprise, the output showed that /var/log was limited to only 200MB—and it was completely full:

Filesystem Size Used Avail Use% Mounted on

/dev/md0 200M 200M 0 100% /

This was a huge clue. There was a hard limit enforced on /var/log that I hadn’t accounted for. At this point, I realized the disk usage in /dev/md0 wasn’t the issue. It was the small quota applied specifically to the log directory.
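In hindsight, a quick write probe would have settled the "full or corrupt?" question much earlier: if you can't write a few kilobytes into the log directory, SQLite can't either. Here is a minimal sketch of that idea. The function name write_probe and the probe file name are my own inventions for illustration, not anything DSM ships.

```shell
#!/bin/sh
# write_probe DIR [SIZE_KB]: try to write SIZE_KB (default 64) of zeros into DIR.
# Hypothetical helper name; it only relies on dd and rm.
write_probe() {
    dir=$1
    size_kb=${2:-64}
    probe="$dir/.write_probe.$$"   # $$ is the shell PID, keeps the name unique
    if dd if=/dev/zero of="$probe" bs=1024 count="$size_kb" 2>/dev/null; then
        rm -f "$probe"
        echo "writable: $dir"
        return 0
    fi
    # dd failed: remove any partial file and report the failure.
    rm -f "$probe"
    echo "write failed: $dir (full, over quota, read-only, or missing?)"
    return 1
}
```

Calling write_probe /var/log/synolog on the NAS would be the obvious use. A failure there, while df on the root filesystem still shows free space, points at a limit on the directory rather than a broken database.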

Now, to uncover the culprit, I ran the du command to see what was taking up all the space in /var/log:

du -ah /var/log | sort -rh | head -n 20

This showed me that the systemd.log file was consuming a massive 94MB, nearly half the entire quota for the directory. The last entries had been appended a month ago, which suggested the file had grown without ever being rotated until the quota filled up and writes stopped. Time for action!

I quickly truncated the log:

> /var/log/systemd.log
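Truncating like that throws away the whole file. If you'd rather keep the most recent entries, something along these lines should work. It's a sketch under assumptions: trim_log is a made-up helper name, the default of 1000 lines is arbitrary, and the temporary copy is staged wherever mktemp puts it (normally outside the full log area).

```shell
#!/bin/sh
# trim_log FILE [LINES]: keep only the last LINES lines of FILE (default 1000).
# Hypothetical helper, not part of DSM.
trim_log() {
    file=$1
    keep=${2:-1000}
    [ -f "$file" ] || { echo "trim_log: no such file: $file" >&2; return 1; }
    # Stage the tail in a temp file; mktemp honours TMPDIR if it is set.
    tmp=$(mktemp) || return 1
    tail -n "$keep" "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Overwrite in place so the file keeps its inode, owner, and permissions.
    cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

For example, trim_log /var/log/systemd.log 5000 would keep the last 5000 lines instead of wiping everything.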

After this, I returned to the SQLite database with a bit more hope. I ran the sqlite3 command again:

sqlite3 /var/log/synolog/.SYNOACCOUNTDB ".tables"

And boom—the tables were there, and the database was accessible again. The issue wasn’t corruption at all; it was simply a case of disk quota limitation causing the logs to fail.

With that, Log Center sprang back to life, and all was well again. Synology Application Service installed without a hitch, and everything was back in action.

While I didn’t go back to Synology Photos (since I had already switched to Immich), I felt relief knowing the NAS was back on track. 😌 So, there you have it—the root cause wasn’t corruption, it was a disk quota enforced on /var/log, and once I found and cleared the log bloat, everything clicked into place.

💥 The 200MB Limit: Seriously?

Sure, I had tons of space elsewhere, but Synology had decided to set a hard limit of 200MB for the log directory. Yep, you heard that right—200MB for logging.

My assumption is that DSM sets up /var/log on a loopback device or a tmpfs overlay, which makes the limit nearly impossible to adjust. I tried to remount it anyway:

mount -o remount,size=400M /var/log

But nope. No dice. DSM refused to budge. That 200MB limit was hardcoded. No matter what I did, I couldn’t just resize the log partition. 🤦‍♂️

🛠️ Options for Fixing the Nightmare

Option A: Relocate Logs to Persistent Storage 🔄

Okay, time to get creative. The first option is to move logs to other persistent storage. Here’s how you can do it:

Create a new directory on your persistent volume:

mkdir -p /volume1/LogsRedirect/var_log

Copy current log contents (the trailing /. also picks up hidden files at the top level, which /var/log/* would skip):

cp -rp /var/log/. /volume1/LogsRedirect/var_log/

Bind-mount at runtime:

mount --bind /volume1/LogsRedirect/var_log /var/log

Automate it with a startup script, saved as /usr/local/etc/rc.d/logredir.sh:

#!/bin/sh

mount --bind /volume1/LogsRedirect/var_log /var/log

And then make it executable:

chmod +x /usr/local/etc/rc.d/logredir.sh
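One catch with the bare one-liner: if the script runs twice, it stacks a second bind mount on top of the first. A slightly more defensive version could look like the sketch below. The marker file trick and the names redirect_logs and .logredir_marker are my own conventions for illustration, and I'm assuming DSM calls rc.d scripts with a start argument at boot, so treat the last comment as a guess to verify on your box.

```shell
#!/bin/sh
# redirect_logs SRC DST: bind-mount SRC over DST unless that already happened.
# The marker file name is a made-up convention, not something DSM provides.
redirect_logs() {
    src=$1
    dst=$2
    marker=.logredir_marker
    [ -d "$src" ] || { echo "logredir: missing source $src" >&2; return 1; }
    [ -d "$dst" ] || { echo "logredir: missing target $dst" >&2; return 1; }
    # The marker lives in SRC, so it shows up under DST only once SRC is mounted there.
    : > "$src/$marker" || return 1
    if [ -e "$dst/$marker" ]; then
        echo "logredir: $dst already redirected"
        return 0
    fi
    mount --bind "$src" "$dst"
}

# In the rc.d script itself you would finish with something like:
#   case "$1" in start) redirect_logs /volume1/LogsRedirect/var_log /var/log ;; esac
```

The checks also mean a missing volume (say, the array didn't come up) fails loudly instead of mounting nothing over your logs.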

Option B: Hack the Loopback Device (Not Recommended) 🔧

For the brave (or foolish), you could try replacing the squashfs image used for /var/log, or even patch DSM’s startup sequence. But here’s the thing: it voids warranties and risks future DSM updates breaking your system. Definitely not for the faint-hearted.

Option C: Monitor, Pray, and Hope DSM Doesn’t Break Everything 🤞

For those who don’t want to get too deep into hacky solutions, just keep an eye on the logs, configure available log rotation mechanisms, and pray that Synology doesn’t break anything else.
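If you go the monitoring route, the "keep an eye on it" part can be a tiny script run on a schedule. Below is a rough sketch that compares what du reports for the directory against the 200MB cap from this post. The function names, the 80% threshold, and the 204800KB default are all assumptions on my part, so adjust them to whatever your NAS actually enforces.

```shell
#!/bin/sh
# quota_pct USED_KB LIMIT_KB: integer percentage of the limit in use.
quota_pct() {
    echo $(( $1 * 100 / $2 ))
}

# check_log_quota [DIR] [THRESHOLD_PCT] [LIMIT_KB]
# Defaults assume the 200MB cap described in this post (204800 KB), warn at 80%.
# Exit status: 0 = below threshold, 1 = at or above it, 2 = could not measure.
check_log_quota() {
    dir=${1:-/var/log}
    threshold=${2:-80}
    limit_kb=${3:-204800}
    used_kb=$(du -sk "$dir" 2>/dev/null | awk '{print $1}')
    [ -n "$used_kb" ] || { echo "cannot measure $dir" >&2; return 2; }
    pct=$(quota_pct "$used_kb" "$limit_kb")
    if [ "$pct" -ge "$threshold" ]; then
        echo "WARNING: $dir uses ${used_kb}KB, ${pct}% of ${limit_kb}KB"
        return 1
    fi
    echo "OK: $dir uses ${used_kb}KB, ${pct}% of ${limit_kb}KB"
}
```

Because it returns a non-zero status on a warning, you can hang a notification off it in whatever scheduler you use. For scale, my 94MB systemd.log alone works out to 47% of the cap with this arithmetic.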

💬 Conclusion

So, let’s wrap this up. Synology’s 200MB log limit is an absolute head-scratcher. No one should have to go through what I did just to get a few logs working. It’s a complete oversight that needs to be fixed—and fast.

The lesson? Don’t just assume things will work out. When they don’t, dig deep, and don’t be afraid to get your hands dirty. And Synology, for the love of logs, make this a configurable option next time, will you? 🔧

TL;DR: Synology’s 200MB log partition limit is a pain. But with some creative thinking and a bit of SSH kung fu, I managed to get things back on track. Now, I’m running Immich and enjoying the smooth sailing. Take it as a lesson, folks—never underestimate the importance of logs, or the chaos that can ensue when they break. 🚀